Inside Out

Two Jointly Predictive Models for Word Representations and Phrase Representations

Problem

The distributional hypothesis lies at the root of most existing word representation models, which infer a word's meaning from its external contexts. However, distributional models run into limitations when answering questions like:

- What if there were no context for a word?

- Which word is closer to buy: buys or sells?

Motivation

In fact, these questions are not difficult to answer if we take the internal structure of words (morphological information) into account.

However, the limitation of morphology is that it cannot infer the relationship between two words that do not share any morphemes.

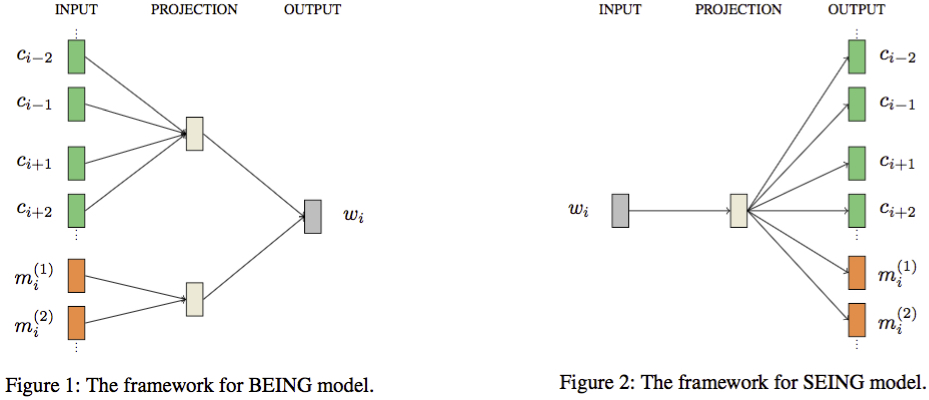

Considering the advantages and limitations of both approaches, we propose two novel models, BEING and SEING, that build better word representations by modeling both external contexts and internal morphemes in a jointly predictive way.

These two models can also be extended to learn phrase representations according to the distributed morphology theory.

Methods

Our methods build on the Continuous Bag-of-Words (CBOW) and Skip-Gram (SG) models. In a nutshell, we treat the two sources, i.e., internal morphemes and external contexts, equally when inferring word representations, and model both in a general predictive way.
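To make the idea of treating both sources equally more concrete, here is a minimal CBOW-style sketch in Python with NumPy. It is an illustration under stated assumptions, not the released implementation: the negative-sampling objective, the averaging of input vectors, the toy vocabulary, the hand-written morpheme segmentation, and every name in it (`joint_cbow_loss`, `word_in`, `morph_in`, `word_out`) are hypothetical choices made for this example.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def joint_cbow_loss(target, context_ids, morpheme_ids, negatives,
                    word_in, morph_in, word_out):
    """Negative-sampling loss for predicting `target` from two sources.

    Assumption: each source (external context words, internal morphemes)
    is averaged into one hidden vector and contributes one CBOW-style
    term; the two terms are summed with equal weight.
    """
    loss = 0.0
    for source_vecs in (word_in[context_ids], morph_in[morpheme_ids]):
        h = source_vecs.mean(axis=0)               # average the source vectors
        pos = np.log(sigmoid(word_out[target] @ h))            # true target
        neg = np.log(sigmoid(-(word_out[negatives] @ h))).sum()  # sampled noise
        loss -= pos + neg
    return loss


# Toy setup (hypothetical vocabulary and segmentation).
words = ["he", "buys", "apples", "sells", "buy"]
morphemes = ["buy", "s", "sell", "apple", "he"]
dim = 8
rng = np.random.default_rng(0)
word_in = rng.normal(scale=0.1, size=(len(words), dim))       # context word vectors
morph_in = rng.normal(scale=0.1, size=(len(morphemes), dim))  # morpheme vectors
word_out = rng.normal(scale=0.1, size=(len(words), dim))      # output (target) vectors

target = words.index("buys")
context_ids = [words.index("he"), words.index("apples")]         # external context
morpheme_ids = [morphemes.index("buy"), morphemes.index("s")]    # internal morphemes
negatives = [words.index("sells")]                               # one noise word

loss = joint_cbow_loss(target, context_ids, morpheme_ids, negatives,
                       word_in, morph_in, word_out)
print(f"joint loss: {loss:.4f}")
```

A Skip-Gram-style counterpart would reverse the direction of prediction, using the target word's vector to predict each context word and each morpheme in turn; the equal weighting of the two sources would stay the same.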

Data

- Pre-trained word vectors

- Training corpus: Wikipedia 2010

Code

Paper

Fei Sun, Jiafeng Guo, Yanyan Lan, Jun Xu, and Xueqi Cheng. Inside Out: Two Jointly Predictive Models for Word Representations and Phrase Representations. In Proceedings of the 30th AAAI conference.

Citation

@inproceedings{Fei:Inside,

author = {Fei Sun and Jiafeng Guo and Yanyan Lan and Jun Xu and Xueqi Cheng},

title = {\textit{Inside Out}: Two Jointly Predictive Models for Word Representations and Phrase Representations},

booktitle = {Proceedings of the 30th AAAI conference},

year = {2016},

location = {Phoenix, Arizona, USA},

}